Generative AI (GenAI) systems, such as ChatGPT, can serve students as a personal tutor: by allowing them to study what interests them, by providing in-depth explanations of topics they didn’t quite understand, by assessing their work and its problems, and by providing shortcuts for parts of their work that aren’t directly relevant to what they want to learn. However, students sometimes misuse GenAI to derive answers for work they were supposed to conduct on their own as part of their learning, or accept its answers uncritically. For the first type of misuse, part of the blame occasionally also lies with educators for giving out-of-class assignments that GenAI can perform with ease. For the second type of misuse, students must learn to avoid using unverified GenAI output. Needless to say, in both cases the misuse of GenAI may also constitute academic fraud and violate the students’ university code of conduct. Here is my take on the practicalities of the two cases.

Saying no to GenAI answers

To deal with answers derived from GenAI, educators should set out-of-class assignments that require building on top of GenAI output rather than parroting it back. Detecting whether some text has been written by GenAI is becoming ever more difficult, so I’ve stopped trying to detect it and confront my students about its use. Rather, I tell them that if their answers could have been given by GenAI, then the value I assign to them is minimal. This means that answers that were perfect in 2020 are no longer so today; the demands on the students’ work are now much higher due to the availability of GenAI.

As an example, consider a question in a Software Engineering course regarding the benefits that the Eclipse project gains from the use of software configuration management. A query to ChatGPT (4o) provides the following answer, which looks mostly correct, but is also generic and of minimal value, because it was derived automatically through a trivial prompt and a tool anyone can use.

“Software Configuration Management (SCM) is crucial for complex projects like Eclipse, as it helps manage changes systematically and efficiently. Here are some of the key benefits that the Eclipse project gains from using SCM:

1. Version Control and Tracking:

- Eclipse is a large, modular project with many contributors. SCM systems (like Git) allow tracking changes made by different developers, making it easy to manage concurrent changes.

- Version history helps in understanding when and why changes were made, aiding debugging and audits.

2. Collaboration and Team Coordination:

- Eclipse involves contributions from developers across the globe. SCM facilitates seamless collaboration by allowing multiple people to work on the same codebase without conflicts.

- Branching and merging strategies help manage feature development, bug fixes, and releases in parallel.

3. Code Integrity and Safety:

- SCM enforces controlled access to code, reducing the chances of accidental overwrites or loss.

- Rollback capabilities allow reverting to a stable version if new changes break the build or introduce bugs.

4. Automated Build and Release Management:

- SCM integrates with build automation tools (like Jenkins), ensuring that code changes trigger automated builds and tests.

- This integration helps maintain a stable development environment, allowing for continuous integration and delivery.

5. Dependency Management:

- Eclipse projects often depend on external libraries and plugins. SCM ensures that specific versions of dependencies are linked to corresponding code versions, avoiding compatibility issues.

6. Change and Issue Tracking:

- SCM systems often integrate with issue tracking tools (like Bugzilla for Eclipse), linking code changes to reported bugs or feature requests.

- This traceability makes it easier to understand why a change was made and helps prioritize bug fixing.

7. Documentation and Release Notes:

- Automatic generation of changelogs and release notes from commit messages helps maintain comprehensive project documentation.

- This transparency is vital for users and developers to understand what has changed between versions.

8. Backup and Disaster Recovery:

- Storing code and changes in an SCM repository acts as a backup. Even if a local system crashes, the codebase is preserved.

- Distributed version control (like Git) provides redundancy by allowing every developer to have a complete copy of the repository.

9. Managing Forks and Contributions:

- The Eclipse Foundation often deals with forks and external contributions. SCM provides tools for handling pull requests, reviews, and merging.

- Contributors can easily fork the project, experiment, and submit changes without disrupting the mainline development.

10. License Compliance and Code Reviews:

- SCM tools can automatically check for licensing issues, ensuring that open-source compliance is maintained.

- Code review processes can be enforced through pull request workflows, improving code quality.

By leveraging SCM, the Eclipse project maintains a structured, collaborative, and scalable development process, ensuring that the complex ecosystem of plugins, tools, and core functionality remains robust and maintainable.”

What I expect from my students is to dig deeper and provide concrete, specific, quantitative and qualitative data that support the above claims. For example, for claim 1 they can measure the number of Eclipse contributors, the size of Eclipse, and the number of commits and contributors per month, also showing me how they derived those numbers. For claim 2 they can visualize branches and merges to illustrate coordination. For claim 3 they can look at reverted commits, and also examine why the reverts were needed.
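As an illustration of the kind of derivation I have in mind for claims 1 and 3, here is a minimal sketch in Python. It is not a prescribed method: the function names are made up for this example, it assumes a local Git clone of the repository under study, and it identifies contributors by author email and reverts by the default subject line that `git revert` writes, both of which are simplifications a student would need to justify or refine.

```python
import subprocess
from collections import defaultdict


def monthly_activity(log_text):
    """Map each month to (commits, distinct authors).

    Expects one 'YYYY-MM|author-email' line per commit.
    """
    commits = defaultdict(int)
    authors = defaultdict(set)
    for line in log_text.splitlines():
        if "|" not in line:
            continue  # skip blank or malformed lines
        month, email = line.split("|", 1)
        commits[month] += 1
        # Assumption: one email address corresponds to one contributor.
        authors[month].add(email.strip().lower())
    return {m: (commits[m], len(authors[m])) for m in sorted(commits)}


def count_reverts(subjects_text):
    """Count commits whose subject starts with 'Revert "'.

    This is the default subject of git revert; hand-edited messages
    are missed, so the result is a lower bound.
    """
    return sum(1 for s in subjects_text.splitlines() if s.startswith('Revert "'))


def git_log(repo, fmt, *extra):
    """Return the output of git log for repo with the given pretty format."""
    cmd = ["git", "-C", repo, "log", f"--pretty=format:{fmt}", *extra]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def report(repo):
    """Print per-month commits and contributors, then the revert count."""
    per_month = monthly_activity(git_log(repo, "%ad|%ae", "--date=format:%Y-%m"))
    for month, (n_commits, n_authors) in per_month.items():
        print(month, n_commits, n_authors)
    print("reverts:", count_reverts(git_log(repo, "%s")))
```

Calling `report("path/to/clone")` on a checked-out repository prints one line per month followed by the revert count. The numbers are only the starting point: explaining what they show, and why individual reverts happened, is the part that still requires the student's own analysis.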

The beauty of such assessment (which I clarify at the course’s beginning) is that it remains in sync with GenAI advancements. No matter what GenAI can do, the students are required to be one step ahead, something that will also be needed in their workplace.

Avoiding GenAI errors

The problem of students uncritically accepting erroneous GenAI results can be lessened by encouraging students to use GenAI in an environment where they can learn from its mistakes. This can happen through program crashes or failing test cases when GenAI writes code for them, or by carefully studying the students’ answers and confronting the students when they present incorrect results. The last approach requires very careful reading and attentiveness from us educators, because, in contrast with the sloppy and clearly incorrect answers students would provide before GenAI, GenAI can provide polished answers that are plausible on the surface but (often only subtly) incorrect.

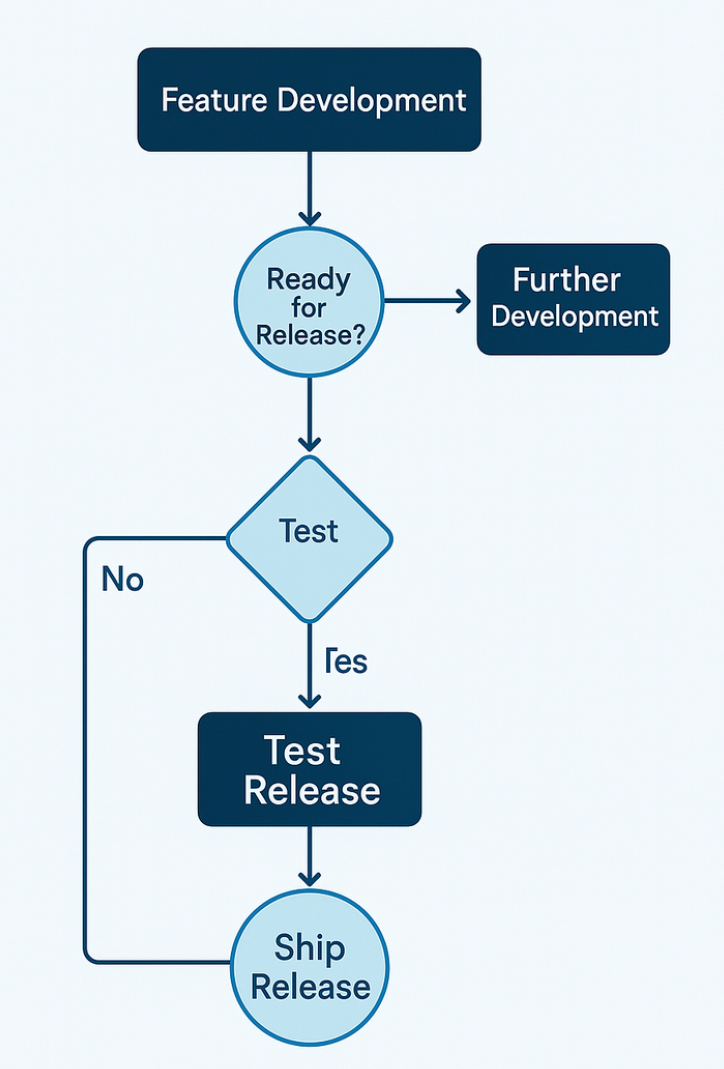

As an example, consider the following diagram, which a student team presented in class concerning JUnit’s release management.

A quick look at the slide while the students present it in class might not trigger an alarm, but if you study the chart carefully you realize it’s completely bogus.

- Both a diamond and a circle are used as decision points.

- There are no labels for the “Ready for Release” decision arrows.

- The “Ready for Release” actions don’t correspond to Yes/No.

- Software that failed testing is released directly (or the software is tested again after release); it’s impossible to say, because the line lacks an arrow.

- There’s a decision on whether to test the software before release.

- Failed tests aren’t handled.
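Some of these checks are structural and could in principle be mechanized. The following Python sketch shows the idea under loud assumptions: the chart representation (a dictionary of node kinds plus a list of labeled arrows) and the function name are invented for this example, and the toy chart is not a transcription of the students' slide. It only flags decisions with fewer than two outgoing arrows, unlabeled decision arrows, and non-terminal nodes where the flow stops.

```python
from collections import defaultdict


def check_flowchart(nodes, edges):
    """Return a list of structural problems found in a flowchart.

    nodes: {name: kind}, kind being "action", "decision", or "end".
    edges: list of (source, target, label); label is None when absent.
    """
    outgoing = defaultdict(list)
    for src, dst, label in edges:
        outgoing[src].append((dst, label))

    problems = []
    for name, kind in nodes.items():
        branches = outgoing[name]
        if kind == "decision":
            if len(branches) < 2:
                problems.append(f"{name}: decision has fewer than two outgoing arrows")
            if any(label is None for _, label in branches):
                problems.append(f"{name}: decision arrow without a label")
        elif kind != "end" and not branches:
            problems.append(f"{name}: no outgoing arrow, so the flow stops here")
    return problems


# Hypothetical toy chart: failed tests are not handled and the single
# arrow leaving the decision carries no label.
toy_nodes = {"Run tests": "action", "Tests pass?": "decision",
             "Fix": "action", "Release": "end"}
toy_edges = [("Run tests", "Tests pass?", None),
             ("Tests pass?", "Release", None),
             ("Fix", "Run tests", None)]
toy_problems = check_flowchart(toy_nodes, toy_edges)
```

Such a check catches only the mechanical flaws; whether the chart matches how JUnit is actually released still requires reading it against the project's real process.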

As the columnist Bill Vaughan wrote in 1969, “To err is human, but to really foul things up you need a computer.”

During the break I discussed the chart with the student who presented it, and he admitted that it was created by GenAI through a correct prompt that he wrote and provided, so he assumed that the chart (which he might have thought was trivial to generate) would also be correct. I’m sure he has now learned not to trust GenAI answers blindly.

Last modified: Friday, April 11, 2025 6:31 am

Unless otherwise expressly stated, all original material on this page created by Diomidis Spinellis is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.